TL;DR: LLM chains are structured pipelines that orchestrate prompts, model calls, retrievals, and tools so large language models can solve multi-step tasks and make decisions more reliably. Use chains when you want predictable flows; use agents when the model needs to decide which action to take.

This post explains patterns (stuffing, map-reduce, refine), decision-making architectures (rule-gates, router→worker, agentic planning), testing/ops, and GDPR/data-residency considerations for production.

So, what are they & why does it matter?

An LLM chain is a developer-defined sequence of steps, prompt templates, LLM calls, tool invocations (search, SQL, APIs), retrievers, and validators, executed in order to accomplish a task that a single prompt would struggle with. Chains let you split complexity, ground outputs with retrieval, and add deterministic checks between model calls so the final output is more reliable than a single-shot generation. The LangChain project popularized this composition-first approach and offers canonical tutorials and utilities to build chains quickly.

Chains are the “combinator” pattern for LLM apps: small, testable pieces glued together to form larger behavior. That matters for both engineering hygiene and SEO, readers search for concrete names and patterns (map-reduce, refine, retrieval-augmented generation), so use those terms in your headings and first paragraph.

Core chain patterns

These are the building blocks you’ll use repeatedly:

- Stuffing (Concat): Put the retrieved docs or context straight into the prompt. Simple, but token-inefficient and brittle once documents grow large. Good for small, high-relevance contexts.

- Map-Reduce Chain: Split a large document into chunks (map: summarize each chunk), then combine those summaries (reduce: synthesize final answer). Ideal for long documents and efficient token usage. Many frameworks expose

MapReduceChainhelper utilities. - Refine Chain: Produce a draft, then iteratively refine it with additional context or passes. Great for long-form generation or multi-pass QA where each pass corrects or expands the previous output.

- Sequential / Pipeline Chain: Deterministic series of tasks: extract → validate → transform → output. Workflows with strong business rules prefer this approach.

- Agentic Chains (Agents): Dynamic, the LLM decides which tools to call and when (search, calculators, APIs). Agents are flexible for exploratory tasks but require strong guardrails for safety and cost control. The LangChain docs show typical patterns like plan → act → observe → repeat.

Taxonomy: chains vs agents vs chain-of-thought

- Chains: Developer-defined, ordered flows, predictable and testable.

- Agents: Model decides control flow and tool usage, flexible but harder to constrain.

- Chain-of-Thought (CoT) Prompting: Internal prompting strategy that encourages models to show step-by-step reasoning. Can be embedded into chains or agents to improve multi-step reasoning accuracy. Research shows CoT substantially improves reasoning for many tasks when used properly.

Designing decision-making logic: patterns, tradeoffs & examples

Rule-based gating + LLM refinement

- How: Deterministic rules filter inputs (e.g., block PII, enforce rate limits). Approved items go through LLM chains for interpretation.

- Pros: Predictable and safe.

- Cons: Brittle for open-ended inputs.

- When to use: Legal/finance workflows, safety-critical paths.

Classifier → Router → Worker (Hybrid)

- How: Fast classifier routes requests to specialized chains (billing, support, etc.). Each worker chain contains domain logic and retrieval.

- Pros: Cost & latency optimized.

- Cons: Needs labeled data; routing errors possible.

- When to use: Multi-domain support systems.

LLM-as-router

- How: Use a small LLM prompt to choose among options when training data is scarce.

- Pros: Fast to bootstrap.

- Cons: Router decisions can drift; needs monitoring.

- When to use: Early prototypes or fuzzy domain boundaries.

Agentic iterative planning

- How: Model plans multiple steps, calls tools, reads results, and replans until completion.

- Pros: Highly flexible for arbitrary workflows.

- Cons: Cost, complexity, and potential unsafe actions without guardrails.

- When to use: Research assistants, exploratory agents, complex automation.

Ensembles & self-consistency

- How: Run multiple strategies and combine or vote on outputs using CoT and self-consistency to reduce hallucination.

- Pros: Higher accuracy in critical tasks.

- Cons: Higher cost and engineering overhead.

Decision checklist when choosing an architecture: safety requirements, latency targets (p90/p99), cost per request, observability needs, human-in-the-loop requirements, and regulatory constraints (GDPR/data residency).

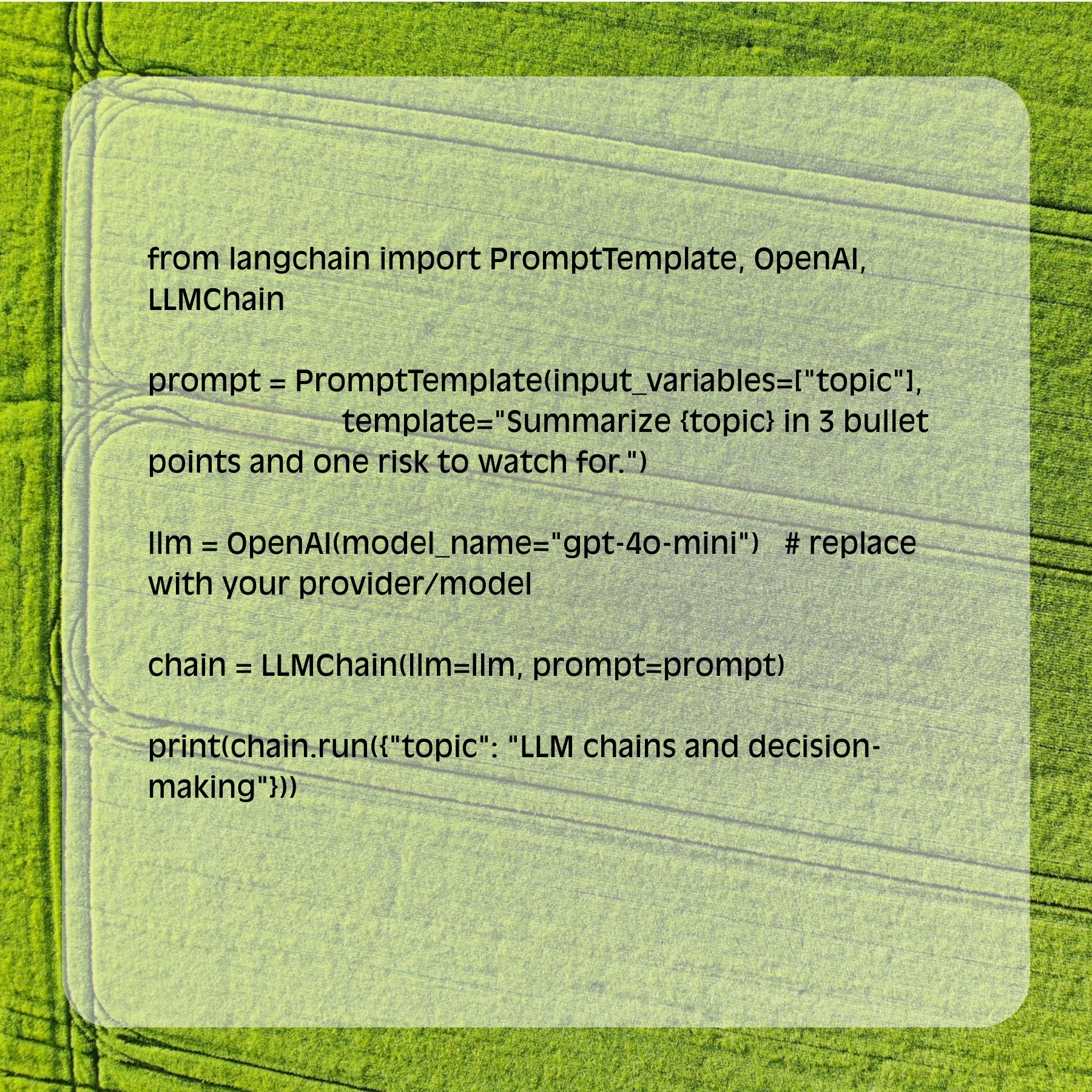

Minimal LangChain example

Python demo showing the essence of an LLMChain-like call (adapt to your LangChain version/provider):

For production chains, combine retrieval (vector DB), validators, and caching between calls. Refer to LangChain tutorials for MapReduceChain and agent examples.

Testing, monitoring & metrics (must-haves)

Track both correctness and operational behavior:

- Task success rate (human labeled)

- Hallucination incident rate per 1k requests

- Latency p50/p90/p99 and cost per user session

- Tool error rate (external API failures)

- Router misclassification rate (if using classifier routing)

Instrument decisions: log inputs, intermediate steps, chosen tools, and final output. Canary new chain logic on a small traffic slice first and compare success & cost before full rollout.

Production practices: scaling, fallbacks & cost control

- Cache outputs for identical requests.

- Use retrieval/RAG to ground answers and reduce hallucination.

- Circuit breakers and timeouts for external tool calls.

- Model fallback strategy: route to a cheaper model with degraded UX when primary model is unavailable.

- Rate limits & budget alerts to avoid runaway costs.

Privacy, compliance & GEO considerations

Deploying LLM chains in the wild touches regulation. The European Data Protection Board (EDPB) and other authorities have published guidance on LLM privacy risks, anonymization, and data-protection-by-design, essential reading for EU deployments and helpful for others. If your chain handles personal data, perform a DPIA, document data flows, and confirm data residency constraints with your provider.

GEO practical notes

- EU: Follow GDPR/EDPB guidance, keep records of processing, and consider on-prem or region-locked deployments for sensitive data.

- US: Sectoral rules (healthcare/HIPAA, finance) require additional safeguards.

LLM chains are just the start. As agentic planning and multi-model orchestration mature, there’s a lot more ground to cover. If you want to explore how these approaches fit into your product roadmap, get in touch with us, we’d be happy to share what’s worked (and what hasn’t) in production.

Developer Tips & Insights

Building a HIPAA-safe Patient Dashboard with Next.js + Node.js- powered by Brightree data through MuleSoft