Beyond scripted bots, we build chatbots that understand, respond, and actually solve problems.

We define what your chatbot should do (sell, support, onboard, guide, or all of the above) and map out its tone, behavior, and domain expertise.

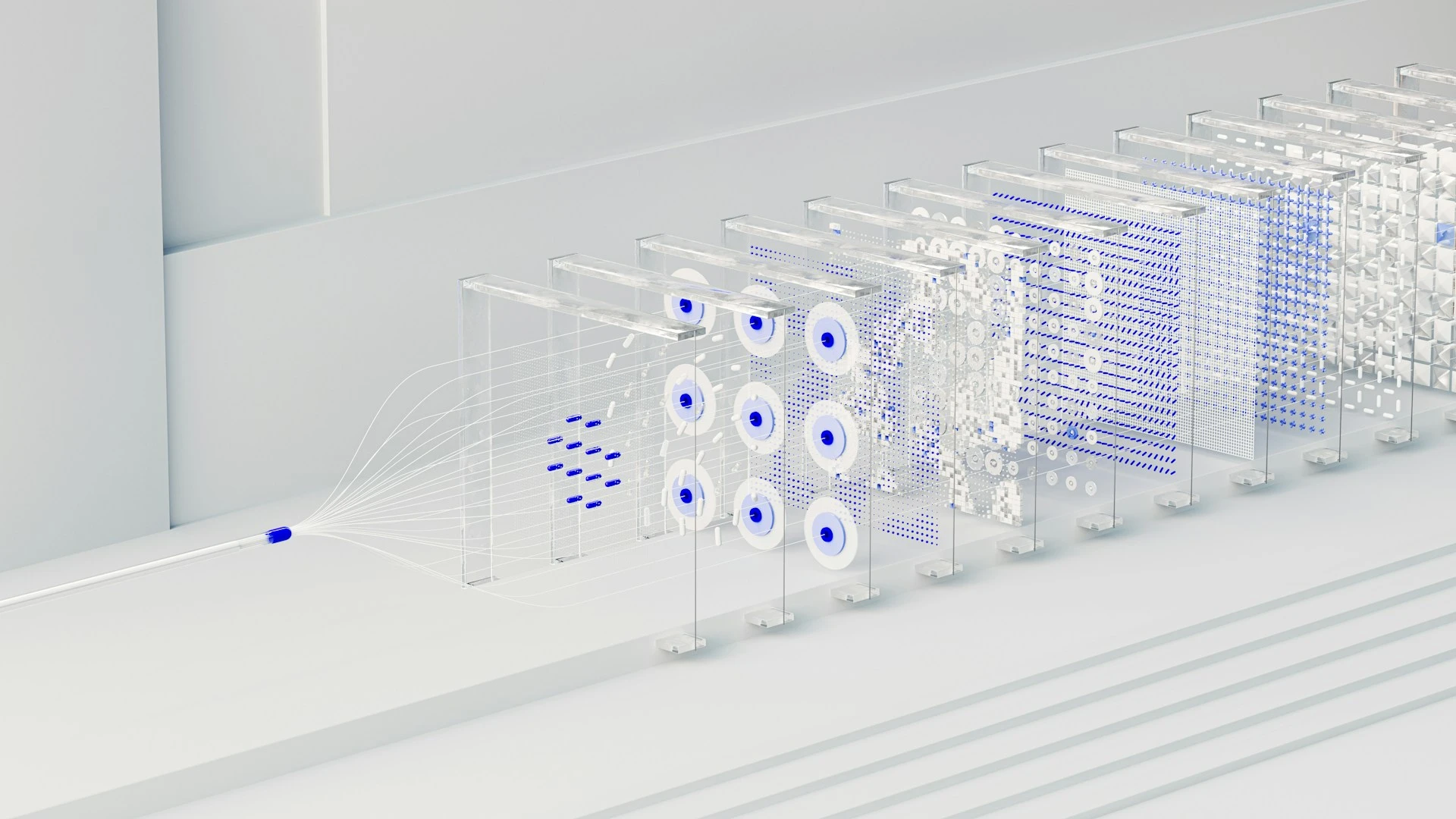

We connect your chatbot to your internal docs, FAQs, product data, and CRMs, typically through a retrieval-augmented generation (RAG) pipeline, so it has real knowledge to draw on.

We design structured prompts and “personas” that guide how the bot communicates. Formal? Friendly? Technical? We tailor it to your brand.

We add context handling, multi-turn memory, and safe fallbacks to keep the chatbot grounded and on-script, minimizing hallucinations.

We monitor real chats, update the bot with new inputs, and fine-tune it for better accuracy, tone, and flow.

Powerful coding foundations that drive scalable solutions.

Enhanced tools and add-ons to accelerate development.

Secure, flexible, and future-ready infrastructure in the cloud.

LLMs & GenAI

We create conversational AI that goes beyond scripted bots. It delivers natural, accurate, context-aware interactions that automate support, boost sales, and guide users, while keeping hallucinations and generic replies to a minimum.

We define clear roles and goals (support agent, sales assistant, onboarding guide). We layer in RAG to pull precise answers from your docs, FAQs, product data, CRMs, or helpdesks, and engineer prompts and personas for on-brand tone and behavior. This approach to LLM chatbot development delivers enterprise-grade, customizable solutions that reduce operational load, increase engagement, and provide measurable ROI. We start with a focused MVP covering your highest-volume intents for quick value, then scale reliably as your needs grow.

Build chat assistants with real understanding and robust knowledge: conversational AI that delivers answers, not just responses.