Turn your AI into a specialist, one prompt at a time

We begin by identifying the user goals, domain-specific knowledge, and tone the AI needs, whether it is acting as a recruiter, legal advisor, product trainer, or analyst.

We build optimized prompts using system roles, few-shot examples, and formatting guards, then refine them through testing for consistency, hallucination control, and speed.
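As a rough illustration of how these pieces fit together, here is a minimal Python sketch that combines a system role, few-shot examples, and a formatting guard into a chat-style message list. `PromptSpec` and `build_messages` are hypothetical names, and the `role`/`content` dictionaries follow a convention that many chat APIs use; the exact schema differs between providers, so treat this as a starting point rather than a drop-in integration.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the role, tone, and examples gathered during discovery."""
    role: str  # e.g. "senior recruiter"
    tone: str  # e.g. "concise and neutral"
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, ideal output) pairs
    format_rule: str = "Reply only with valid JSON."  # the formatting guard


def build_messages(spec: PromptSpec, user_input: str) -> list[dict[str, str]]:
    """Assemble a chat-style message list: system role, few-shot pairs, then the live input."""
    system = (
        f"You are a {spec.role}. Use a {spec.tone} tone. "
        f"{spec.format_rule} If you are unsure, say so instead of guessing."
    )
    messages = [{"role": "system", "content": system}]
    # Few-shot examples are replayed as earlier user/assistant turns.
    for example_input, example_output in spec.examples:
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The real request always comes last.
    messages.append({"role": "user", "content": user_input})
    return messages


spec = PromptSpec(
    role="senior recruiter",
    tone="concise and neutral",
    examples=[
        ("Candidate: 5 yrs Python, no cloud.", '{"fit": "partial", "gap": "cloud"}'),
        ("Candidate: 3 yrs Go, AWS certified.", '{"fit": "strong", "gap": null}'),
    ],
)
for message in build_messages(spec, "Candidate: 8 yrs Java, Kubernetes."):
    print(message["role"], "->", message["content"][:40])
```

Keeping the examples as separate turns, rather than pasting them into the system text, makes it easy to swap in 2 to 5 examples per use case without touching the role instructions.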

For multi-step or logic-heavy tasks, we design chained prompts in which each output feeds the next step, forming a step-by-step reasoning pipeline.
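A chained prompt can be sketched as a loop over prompt templates in which each model output is substituted into the next template. In this minimal Python sketch, `run_chain` is a hypothetical helper and `echo_model` is a toy stand-in for a real LLM call, assumed only so the example runs without network access.

```python
from typing import Callable

# Any function that takes a prompt and returns text; a real LLM client call would go here.
ModelFn = Callable[[str], str]


def run_chain(steps: list[str], first_input: str, model: ModelFn) -> list[str]:
    """Run templates in order, feeding each output into the next template's {previous} slot."""
    outputs = []
    previous = first_input
    for template in steps:
        prompt = template.format(previous=previous)
        previous = model(prompt)  # this step's output becomes the next step's input
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    # Toy model for demonstration only: returns the last line of the prompt in upper case.
    return prompt.splitlines()[-1].upper()


steps = [
    "Extract the key facts from this contract clause:\n{previous}",
    "List the legal risks implied by these facts:\n{previous}",
    "Summarize these risks for a non-lawyer in two sentences:\n{previous}",
]
results = run_chain(steps, "tenant may terminate with 30 days notice", echo_model)
print(results[-1])
```

In practice each step would usually carry its own system instructions and output checks; the toy model only shows how data flows from one step to the next.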

We plug the engineered prompts into your apps, CRMs, chat tools, or autonomous agents and verify that they perform reliably under real-world conditions.

Powerful coding foundations that drive scalable solutions.

Enhanced tools and add-ons to accelerate development.

Secure, flexible, and future-ready infrastructure in the cloud.

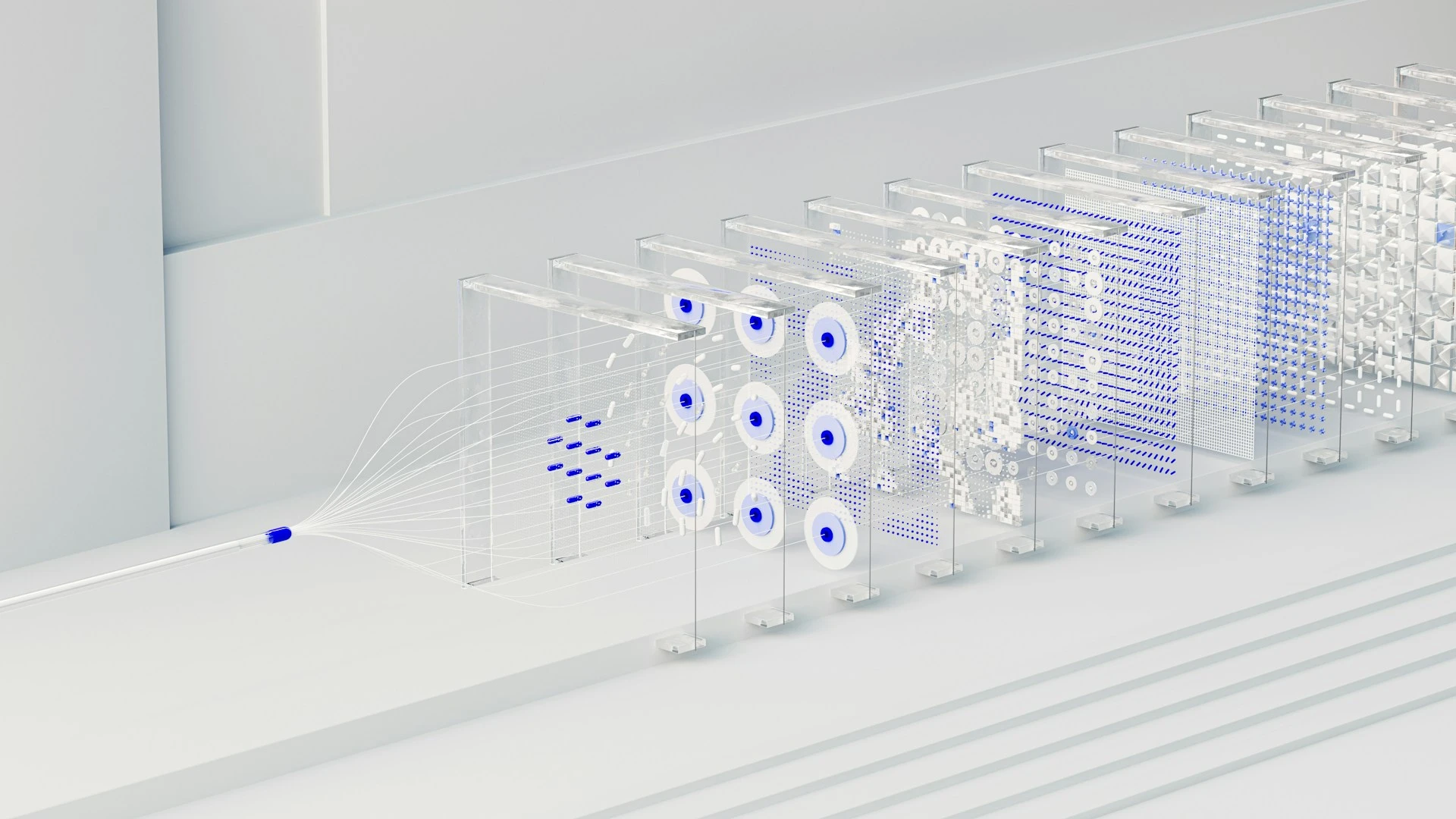

LLMs & GenAI

Our prompt engineers turn generic AI responses into sharp, domain-specific intelligence by designing prompts that make models act like your experts while reducing errors and costs.

We analyze use cases to map roles (senior analyst, recruiter, legal advisor), then build layered prompts with strict system instructions, step-by-step reasoning, 2–5 high-quality few-shot examples, clear constraints, and enforced output formats such as JSON or Markdown tables. This approach delivers consistent, actionable results that match your business tone and goals, reducing rework, improving user trust, and maximizing the return on LLMs from providers such as OpenAI, Anthropic, Google (Gemini), and Mistral without constant model swaps.
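One common way to enforce a format like JSON is to validate every reply and, when it fails, retry with the validation error included in the prompt. The sketch below assumes the model is exposed as a plain prompt-in, text-out function; `call_with_json_guard` and the scripted replies are hypothetical, chosen to show one malformed reply and one incomplete reply before a valid one.

```python
import json
from typing import Callable


def call_with_json_guard(
    model: Callable[[str], str],
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> dict:
    """Call the model until it returns a JSON object containing required_keys, or give up."""
    last_error = ""
    for _ in range(max_attempts):
        full_prompt = prompt
        if last_error:
            # On retries, tell the model what was wrong with its previous reply.
            full_prompt += f"\n\nYour last reply was invalid: {last_error}. Reply with JSON only."
        raw = model(full_prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            last_error = f"not valid JSON ({exc.msg})"
            continue
        if not isinstance(data, dict):
            last_error = "expected a JSON object"
            continue
        missing = required_keys - set(data)
        if missing:
            last_error = f"missing keys {sorted(missing)}"
            continue
        return data
    raise ValueError(f"No valid JSON after {max_attempts} attempts: {last_error}")


# Scripted replies stand in for a real model: chatty text, then incomplete JSON, then valid JSON.
replies = iter([
    "Sure! Here is the JSON:",
    '{"risk": "high"}',
    '{"risk": "high", "reason": "no termination clause"}',
])
result = call_with_json_guard(lambda p: next(replies), "Assess this contract.", {"risk", "reason"})
print(result)  # {'risk': 'high', 'reason': 'no termination clause'}
```

Capping the number of attempts keeps latency and token costs bounded; a failed guard raises an error that the calling application can handle instead of passing malformed output downstream.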

Build and refine prompts for logic, context, and workflow so every AI response reflects your goals, your accuracy standards, and your unique domain knowledge.