AI that fits your business

We dive deep into your current challenges, size, technical maturity, and goals. We prioritize quick wins with high ROI — whether you need automated reports or customer-facing assistants.

We help you choose the right model stack:

- Small businesses: Efficient, affordable models like Phi-3, Gemma, or Claude Haiku
- Mid-size: Hosted LLMs or fine-tuned open models (Mixtral, LLaMA 3)
- Enterprises: Custom-trained models with secure API or on-premise deployment

From prompt design and fine-tuning to building secure APIs, workflows, and dashboards — we handle it all.

We build feedback loops, data validation systems, and compliance guardrails tailored to your scale — so you stay agile, yet safe.

Powerful coding foundations that drive scalable solutions.

Enhanced tools and add-ons to accelerate development.

Secure, flexible, and future-ready infrastructure in the cloud.

Smart insights and visualization that bring data to life.

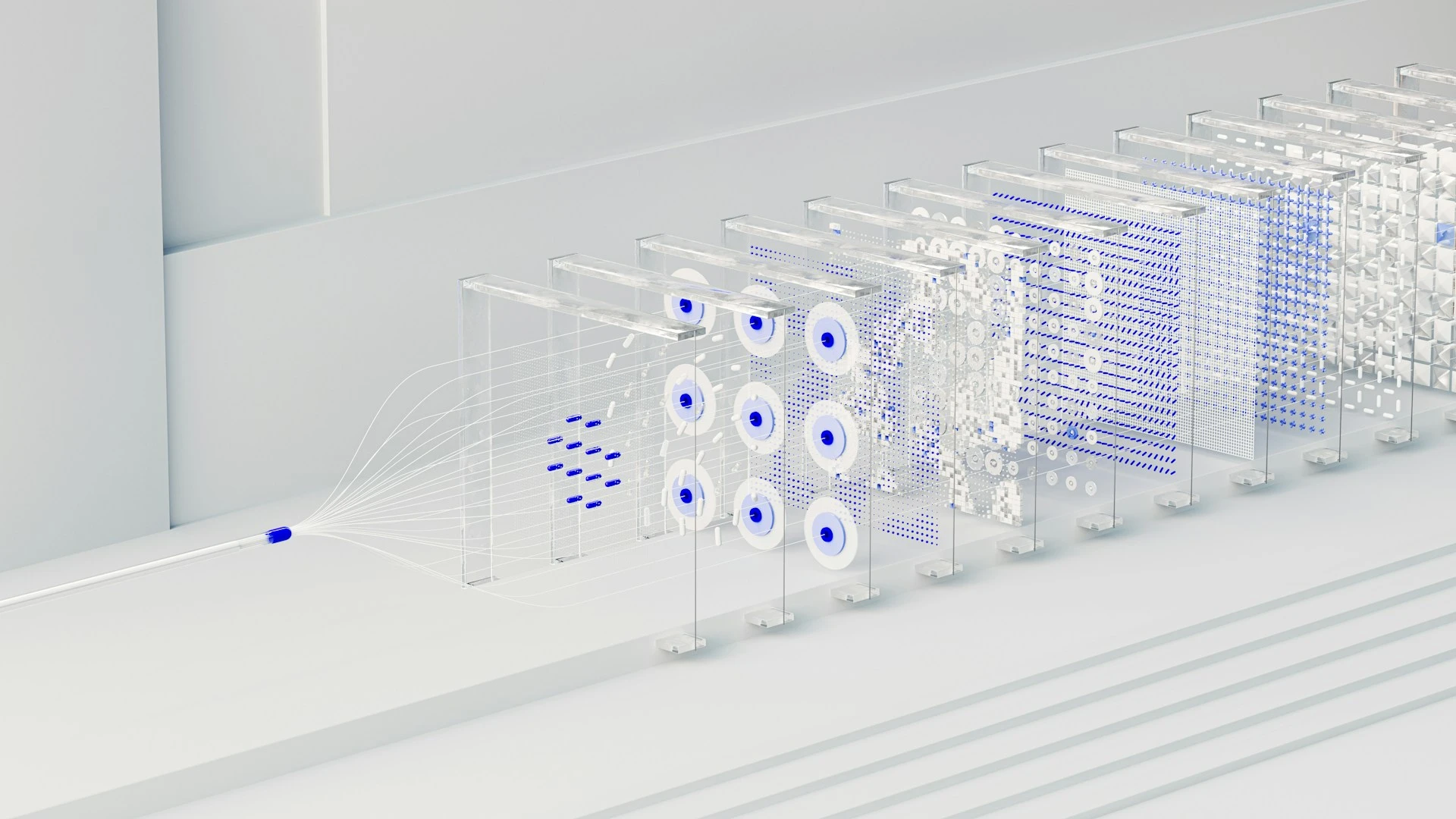

LLMs & GenAI

Deploying LLMs for small, mid-size, and enterprise businesses doesn't require massive budgets or endless experiments. Our approach matches the right model and architecture to your size, stack, and goals for fast, measurable impact.

We identify high-ROI use cases such as automated reports, customer assistants, content generation, and internal copilots, then select efficient models for each tier: Phi-3, Gemma, or Claude Haiku for small businesses; fine-tuned Mixtral or LLaMA 3 for mid-size companies; custom or on-prem models for enterprises. We keep costs under control through caching, routing, truncation, and per-task budgeting.
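For technically minded readers, here is a minimal sketch of how caching, routing, truncation, and per-task budgeting can fit together in one place. It is an illustration under stated assumptions, not our production code: the `CostController` class, the `call_model` callback, the two tier names, the 500-character routing threshold, and the prices are all hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

# Hypothetical tiers and prices (USD per 1,000 prompt characters); not real pricing.
PRICES = {"small": 0.001, "large": 0.01}


@dataclass
class CostController:
    call_model: Callable[[str, str], str]  # (tier, prompt) -> reply; supplied by the caller
    budgets: Dict[str, float]              # per-task spending caps in USD
    max_chars: int = 2000                  # truncation limit applied to every prompt
    spent: Dict[str, float] = field(default_factory=dict)
    cache: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def route(self, prompt: str) -> str:
        # Naive routing rule (an assumption): short prompts go to the cheaper tier.
        return "small" if len(prompt) < 500 else "large"

    def ask(self, task: str, prompt: str) -> str:
        prompt = prompt[: self.max_chars]      # truncation
        tier = self.route(prompt)              # routing
        key = (tier, prompt)
        if key in self.cache:                  # caching: repeated prompts cost nothing
            return self.cache[key]
        cost = PRICES[tier] * len(prompt) / 1000
        used = self.spent.get(task, 0.0)
        if used + cost > self.budgets.get(task, 0.0):  # per-task budgeting
            raise RuntimeError(f"budget exceeded for task {task!r}")
        reply = self.call_model(tier, prompt)
        self.spent[task] = used + cost
        self.cache[key] = reply
        return reply


if __name__ == "__main__":
    # Stand-in for a real model client, used only to make the sketch runnable.
    def fake_model(tier: str, prompt: str) -> str:
        return f"[{tier}] reply to {len(prompt)} characters"

    controller = CostController(call_model=fake_model, budgets={"reports": 0.05})
    print(controller.ask("reports", "Summarize last quarter's sales."))
    print(controller.spent)
```

A real deployment would usually count tokens rather than characters and route on task type or confidence rather than prompt length; the structure stays the same.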

Deploy right-sized LLMs and GenAI for reports, chatbots, and automation: efficient for small businesses, robust for enterprises, and always secure.