Let your AI speak facts, not just patterns

We identify where your most valuable content lives (PDFs, Confluence, Google Drive, Notion, Zendesk, SQL databases, SharePoint) and define the retrieval logic.

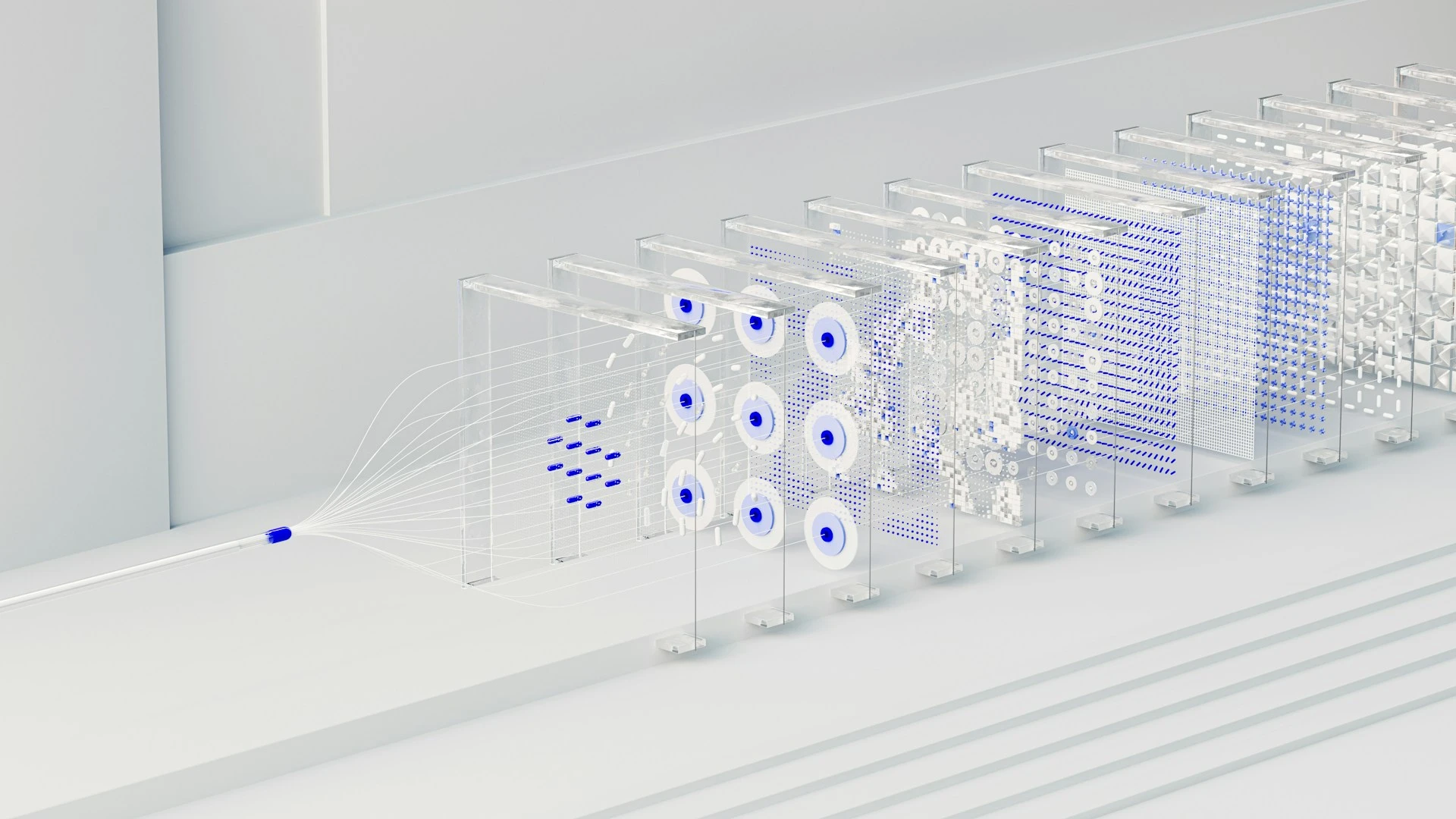

Your documents are broken into logical “chunks” (semantic units), converted into embeddings, and indexed in a vector store such as FAISS, Pinecone, or Weaviate, so the system can match on meaning, not just keywords.
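To make the chunk-and-embed step concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not our production pipeline: `chunk_text`, `toy_embed`, and `cosine` are hypothetical helper names, and the bag-of-words vector is only a stand-in for a real embedding model, so it matches shared words rather than true meaning. In a real system the vectors would come from an embedding model and be stored in an index such as FAISS, Pinecone, or Weaviate.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40) -> list[str]:
    """Group whole sentences into chunks of at most roughly max_words words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        # Start a new chunk when adding this sentence would exceed the budget.
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def toy_embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


doc = (
    "Refunds are issued within 14 days of a return. "
    "Contact support to start a return. "
    "Shipping is free on orders over 50 dollars. "
    "Express shipping costs extra."
)
chunks = chunk_text(doc, max_words=16)
index = [(chunk, toy_embed(chunk)) for chunk in chunks]  # in-memory "vector index"

query = toy_embed("how long do refunds take")
best_chunk = max(index, key=lambda item: cosine(query, item[1]))[0]
print(best_chunk)
```

Chunking on sentence boundaries keeps each unit readable on its own, which matters later when a chunk is shown to the model or cited in an answer.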

We connect a retriever module (such as Elasticsearch or a vector database) to a language model such as GPT, Claude, or an open-source LLM, forming the RAG loop: retrieve the relevant context first, then generate the answer from it.
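The loop itself is small. The sketch below shows one way it could be wired, assuming a toy word-overlap retriever and a stubbed model; `retrieve`, `build_prompt`, `answer`, and `fake_llm` are hypothetical names for illustration. In practice `retrieve` would query Elasticsearch or a vector index, and `generate` would call a hosted or open-source LLM.

```python
from typing import Callable


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank chunks by how many query words they share."""
    q = set(query.lower().split())
    ranked = sorted(
        chunks, key=lambda c: len(q & set(c.lower().split())), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Put the retrieved passages in front of the question, numbered for citation."""
    numbered = "\n".join(f"[{i}] {c}" for i, c in enumerate(context, start=1))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{numbered}\n\nQuestion: {query}"
    )


def answer(
    query: str, chunks: list[str], generate: Callable[[str], str], k: int = 2
) -> str:
    """One pass of the loop: retrieve, augment the prompt, generate."""
    context = retrieve(query, chunks, k)
    return generate(build_prompt(query, context))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call: echoes the top-ranked passage."""
    first = next(line for line in prompt.splitlines() if line.startswith("[1] "))
    return "Stub answer based on " + first


knowledge = [
    "Refunds are issued within 14 days.",
    "Shipping is free over 50 dollars.",
    "Support is open on weekdays.",
]
reply = answer("when are refunds issued", knowledge, fake_llm)
print(reply)
```

Passing the model in as a plain callable keeps the retriever and the LLM swappable independently of each other.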

We add formatting rules, citation references, response filters, and escalation paths, so answers are not just smart, but business-ready.
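As a rough sketch of what such a post-processing layer can look like, the example below attaches citation markers, applies one response filter, and escalates when retrieval support is weak. Everything here is an assumption made for illustration: the `Passage` type, the `finalize` function, the 0.5 score threshold, and the redaction pattern are hypothetical, and real rules would be defined per business.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str   # where the passage came from, e.g. a document title
    text: str
    score: float  # retrieval relevance, assumed to be in [0, 1]


# Hypothetical response filter: mask anything shaped like NNN-NN-NNNN.
SENSITIVE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def finalize(draft: str, passages: list[Passage], min_score: float = 0.5) -> dict:
    """Apply filter, citation, and escalation rules to a drafted answer."""
    supported = [p for p in passages if p.score >= min_score]
    if not supported:
        # Escalation path: no passage is relevant enough to ground an answer.
        return {
            "status": "escalated",
            "answer": "I could not find this in the knowledge base, "
                      "so I am routing you to a human agent.",
            "citations": [],
        }
    cleaned = SENSITIVE.sub("[redacted]", draft.strip())
    citations = [p.source for p in supported]
    markers = " ".join(f"[{i}]" for i in range(1, len(citations) + 1))
    return {
        "status": "answered",
        "answer": f"{cleaned} {markers}",
        "citations": citations,
    }


good = finalize(
    "Refunds take 14 days. Reference 123-45-6789.",
    [Passage("Refund Policy.pdf", "Refunds are issued within 14 days.", 0.82)],
)
weak = finalize(
    "Maybe 30 days?",
    [Passage("Holiday Hours.pdf", "We close on public holidays.", 0.12)],
)
print(good["answer"])
print(weak["status"])
```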

Powerful coding foundations that drive scalable solutions.

Enhanced tools and add-ons to accelerate development.

Secure, flexible, and future-ready infrastructure in the cloud.

LLMs & GenAI

We fix the core problems with plain LLMs (hallucinations and outdated information) by building retrieval-augmented systems that pull precise context from your actual data sources before generating any response.

We map your knowledge across PDFs, Confluence, SharePoint, SQL databases, Zendesk, and more. We then handle semantic chunking, embedding and indexing in vector stores like Pinecone, Weaviate, or FAISS, and tight integration via LangChain or Haystack with models from OpenAI, Anthropic, Google (Gemini), Meta (LLaMA), or Mistral. The result: production-grade RAG that reliably reduces hallucinations, handles long-tail content and frequent updates cost-effectively, scales with caching, sharding, and batching, and turns AI from an experiment into essential business intelligence without constant retraining.
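Caching and batching are the simplest of these scaling levers to show in code. The sketch below is a toy illustration only: `cached_embed` and `batched` are hypothetical helpers, and the two-number "vector" stands in for a real (and much more expensive) embedding call. The point is that repeated queries hit the cache instead of being recomputed.

```python
from functools import lru_cache
from typing import Iterator

calls = {"embed": 0}  # counts how often the expensive step actually runs


@lru_cache(maxsize=1024)
def cached_embed(text: str) -> tuple[float, ...]:
    """Toy embedding; lru_cache returns the stored result for repeated inputs."""
    calls["embed"] += 1
    vowels = sum(ch in "aeiou" for ch in text.lower())
    return (float(len(text)), float(vowels))


def batched(items: list[str], size: int) -> Iterator[list[str]]:
    """Yield fixed-size slices so work can be sent in groups."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


queries = [
    "reset password",
    "refund policy",
    "reset password",
    "refund policy",
    "shipping time",
]
for batch in batched(queries, size=2):
    vectors = [cached_embed(q) for q in batch]

print(calls["embed"])  # 3: one computation per distinct query
```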

Deliver search and retrieval systems that link content, workflows, and cloud data, ensuring every AI output is trustworthy, accurate, and grounded.